Networking Essentials: Rate Limiting and Traffic Shaping

This is the seventh in a series of class notes as I go through the free Udacity Computer Networking Basics course.

Now that we have explored how TCP Congestion Control works, we might think we understand how Internet traffic is formed. But Congestion Control is very low level - there are still high level limits we put on Internet traffic to address resource or business constraints. We call this Rate Limiting and Traffic Shaping.

Ways to classify Traffic

Not all traffic is created equal. Transferring Data is often bursty in terms of traffic needs. Transferring Audio is usually continuous, as you are streaming down a fairly constant stream of data. Transferring Video can be continuous or bursty due to compression.

The high-level way to classify traffic comes down to:

- Constant Bit Rate (CBR): traffic arrives at regular intervals, and packets are roughly the same size, which leads to a constant bitrate (e.g. audio). We shape CBR traffic according to its peak load.

- Variable Bit Rate (VBR): arrival times and packet sizes both vary (e.g. video and data). We shape VBR traffic according to both its peak and its average load.

Traffic Shaping Approaches

There are three we will cover:

- Leaky Bucket

- (R, T) shaping

- Token Bucket

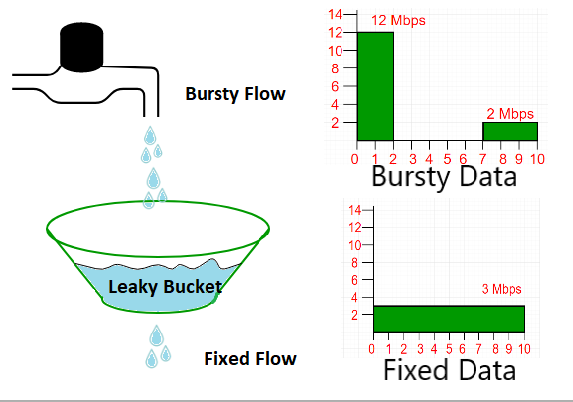

Leaky Bucket Traffic Shaping

A Leaky Bucket turns a bursty flow into a regular flow. The two relevant parameters are bucket size and drain rate. The drain or “leak” acts as the “regulator” on the system. You want to set the drain rate at the average rate of the incoming flow, and the bucket size according to the maximum burst size you expect. Traffic that overflows the bucket is discarded or placed at a lower priority.

Leaky Bucket was developed in 1986 and you can read further here.
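To make the bucket size and drain rate concrete, here is a minimal sketch of a leaky bucket over discrete time steps. The function name `leaky_bucket` and the choice to count traffic in abstract "units" per step are my own assumptions for illustration, and overflow is simply dropped rather than marked lower priority.

```python
def leaky_bucket(arrivals, bucket_size, drain_rate):
    """Simulate a leaky bucket over discrete time steps.

    arrivals[i] is the number of units (e.g. packets) arriving in step i.
    Returns (sent_per_step, dropped_total).
    """
    level = 0        # units currently waiting in the bucket
    sent = []        # units released in each step
    dropped = 0      # units discarded because the bucket was full
    for arriving in arrivals:
        # Accept only what fits; the rest overflows and is discarded.
        accepted = min(arriving, bucket_size - level)
        dropped += arriving - accepted
        level += accepted
        # The leak releases at most drain_rate units per step.
        out = min(level, drain_rate)
        level -= out
        sent.append(out)
    # Keep draining after arrivals stop, until the bucket is empty.
    while level > 0:
        out = min(level, drain_rate)
        level -= out
        sent.append(out)
    return sent, dropped


sent, dropped = leaky_bucket([5, 0, 0, 6, 0], bucket_size=4, drain_rate=2)
print(sent, dropped)
```

Notice that the output never exceeds the drain rate in any step, no matter how bursty the input is.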

(R, T) Traffic Shaping

This variant deals with constant flows best. It works like:

- Divide traffic into T-bit frames

- Inject up to R bits in any T-bit frame

If a sender wants to send more than R bits, it has to wait until the next T bit frame. A flow that follows this rule is called an “(R,T) smooth traffic shape”.

So the maximum packet size is R bits, which usually isn’t very large unless T is long, and the range of behaviors is limited to fixed-rate flows (CBR, above). Variable flows (VBR) have to request a data rate (R bits per T-bit frame) equal to their peak rate, which is very wasteful.

If a flow exceeds the (R,T) rate, the excess packets are assigned a lower priority, or dropped. Priorities are assigned by either the sender (preferred, since it knows its own priorities best) or the network (this is known as policing).
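As a rough illustration of the rule above, the sketch below marks each packet as conforming or excess. It assumes fixed, aligned frames (frame index = arrival time divided by T) rather than a sliding window, and the name `rt_police` and the `(arrival_bit_time, size_bits)` packet format are hypothetical.

```python
def rt_police(packets, R, T):
    """Classify packets against an (R, T) budget.

    packets: list of (arrival_bit_time, size_bits), in arrival order.
    Returns a list of "ok" / "excess" verdicts, one per packet.
    """
    used = {}       # frame index -> bits already admitted in that frame
    verdicts = []
    for arrival, size in packets:
        frame = arrival // T            # which T-bit frame this packet falls in
        if used.get(frame, 0) + size <= R:
            used[frame] = used.get(frame, 0) + size
            verdicts.append("ok")
        else:
            # Over budget for this frame: drop or mark lower priority.
            verdicts.append("excess")
    return verdicts


print(rt_police([(0, 300), (100, 300), (900, 300), (1000, 300)], R=500, T=1000))
```

A sender that shapes its own traffic would hold the excess packets until the next frame instead of letting the network police them.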

Token Bucket Traffic Shaping

In a Token Bucket, tokens arrive at a rate R, and the bucket can take B tokens. These two parameters determine the Token Bucket regulator.

The bursty flow has two parameters Lpeak and Lavg to describe it. It flows into the regulator similar to how it flows into the Leaky Bucket regulator.

The difference here is, traffic in a Token Bucket can be sent by the regulator as long as there is a token for it. So the Token Bucket kind of works like the opposite of the Leaky Bucket. If a packet of size b comes along:

- If the bucket is full, the packet is sent and b tokens are removed.

- If the bucket is empty, the packet must wait until b tokens drip into the bucket.

- If it is partially full, the packet is sent if the bucket holds at least b tokens; otherwise it waits until the number of tokens in the bucket rises to b.

Token Buckets permit variable/bursty flows by bounding them rather than always smoothing them as the Leaky Bucket does. They have no discard or priority policies, whereas Leaky Buckets do. Because a Token Bucket flow can end up “monopolizing” a network, there is a need to police Token Buckets by combining a Token Bucket and a Leaky Bucket into a Composite Shaper.
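The three cases above collapse into one rule: send when the bucket holds at least b tokens, otherwise wait for the shortfall to drip in. Here is a minimal sketch under a few assumptions of my own: the class and method names are hypothetical, time is passed in explicitly in seconds, the bucket starts full, and packets are served in arrival order.

```python
class TokenBucket:
    def __init__(self, rate, capacity):
        self.rate = rate          # R: tokens added per second
        self.capacity = capacity  # B: maximum tokens the bucket can hold
        self.tokens = capacity    # assumption: the bucket starts full
        self.last = 0.0           # time at which self.tokens was last valid

    def send_time(self, now, size):
        """Return the time at which a packet of `size` tokens can be sent."""
        if size > self.capacity:
            raise ValueError("packet can never fit in the bucket")
        now = max(now, self.last)  # earlier packets are served first
        # Refill for the elapsed time, never beyond the capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size    # enough tokens: send immediately
            return now
        # Not enough tokens: wait until the shortfall has dripped in.
        wait = (size - self.tokens) / self.rate
        self.last = now + wait
        self.tokens = 0.0          # all tokens are spent on this packet
        return now + wait


tb = TokenBucket(rate=100, capacity=500)
print(tb.send_time(0.0, 500))   # full bucket: the burst goes out at once
print(tb.send_time(0.0, 200))   # empty bucket: must wait for 200 tokens
print(tb.send_time(10.0, 300))  # bucket has refilled while the flow was idle
```

Unlike the Leaky Bucket, a burst of up to B tokens passes through untouched, and only the long-run rate is bounded by R.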

An interesting technique: Power Boost

First implemented by Comcast in 2006, Power Boost allowed users to send at a higher rate for a brief time. This takes advantage of spare capacity for users who do not put sustained load on a network. It can be “capped” or “uncapped”. The amount of the boost is the excess speed over the rate that the user has subscribed to, and can be regulated by a Token Bucket. A cap is implemented by a second Token Bucket on the amount of the boost.

Power Boosting can have an impact on latency if inbound traffic exceeds router capabilities and buffers start filling up, so implementing a traffic shaper in front of a power boost may help ameliorate this issue. More in this SIGCOMM11 paper.

The initiative was ended in 2013.
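If we model Power Boost as a single Token Bucket that refills at the subscribed rate, a back-of-the-envelope estimate of how long a boost can last follows directly: the bucket drains at the difference between the boosted rate and the subscribed rate. The function name and the example numbers below are illustrative assumptions, not measured values.

```python
def boost_duration(bucket_bits, boost_rate_bps, sustained_rate_bps):
    """Seconds a user can send at the boosted rate before a full bucket empties.

    Assumes the bucket starts full and refills at the sustained (subscribed) rate
    while the user sends continuously at the boosted rate.
    """
    if boost_rate_bps <= sustained_rate_bps:
        raise ValueError("boost rate must exceed the sustained rate")
    return bucket_bits / (boost_rate_bps - sustained_rate_bps)


# Hypothetical plan: 10 Mbps subscribed, 20 Mbps boosted, 10 MB (80 Mbit) bucket.
print(boost_duration(80e6, 20e6, 10e6))
```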

An interesting problem: Buffer Bloat

Large buffers are actually a problem in home routers, hosts, switches and access points, especially when combined with Power Boosting. A period of high inflow (potentially mixing data with other users) exceeding the drain rate of the buffer can cause data to be stalled in the buffer, introducing unnecessary latency that can be a problem for time sensitive applications like voice and video.

It is impractical to try to reduce buffer size everywhere, so the solution to buffer bloat is to use Traffic Shaping methods like the ones we learned above to ensure that the buffer never fills.
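To get a feel for how much latency a full buffer adds, a simple estimate is the buffered data divided by the drain rate. This is a sketch with made-up numbers and a hypothetical function name, and it ignores everything except the queueing delay itself.

```python
def queueing_delay_seconds(buffered_bytes, drain_rate_bps):
    """Time for the last byte in a buffer to drain at the given link rate."""
    return buffered_bytes * 8 / drain_rate_bps


# Hypothetical home router: 256 KB of queued data on a 1 Mbps uplink.
print(queueing_delay_seconds(256 * 1024, 1_000_000))
```

Roughly two seconds of added delay is far more than a voice or video call can tolerate, which is why keeping the buffer from filling matters.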

Network Measurement

How do you “see” what traffic is being sent on the network?

- Passive: collection of packets and flow statistics that are already on the network e.g. packet traces

- Active: inject additional traffic to measure various characteristics e.g. ping and traceroute

Measuring traffic is important for billing users by usage. In practice, companies charge based on the 95th percentile rate (measured every 5 minutes), also known as the Committed Information Rate, which allows for some burstiness.
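Here is a small sketch of how a 95th percentile charge could be computed from the 5-minute samples. It assumes the nearest-rank definition of a percentile (providers may use a slightly different one), and the function name and sample values are hypothetical.

```python
def percentile_95(samples):
    """Nearest-rank 95th percentile of a list of rate samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Smallest rank such that at least 95% of samples are at or below it,
    # computed with integer arithmetic as ceil(0.95 * n).
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


# 19 quiet intervals at 10 Mbps and one burst at 100 Mbps.
print(percentile_95([10] * 19 + [100]))
```

The top 5% of samples are discarded, so a short burst does not raise the bill, which is the burst tolerance described above.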

Measuring traffic is also helpful for security: detecting compromised hosts, botnets, and DDoS attacks.

Passive Measurement

SNMP

Most networks have the Simple Network Management Protocol (SNMP) built in, which provides a Management Information Base (MIB) of data you can analyze. By periodically polling a device's MIB for the byte and packet counts it has sent, you can determine the rate at which it is sending data. But because the data is so coarse, you can’t do more sophisticated queries than that.
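Turning two counter readings into a rate is simple arithmetic, with one catch: the counters have a fixed width and wrap around. The sketch below assumes the two byte-counter values have already been fetched by some SNMP poller (no SNMP library is used here), that at most one wrap happened between polls, and that the counter is 32 bits wide by default. The function name is hypothetical.

```python
def rate_bps(prev_octets, curr_octets, interval_s, counter_bits=32):
    """Average bits per second between two polls of a byte counter."""
    modulus = 1 << counter_bits
    # Modular subtraction gives the right delta even if the counter wrapped once.
    delta = (curr_octets - prev_octets) % modulus
    return delta * 8 / interval_s


print(rate_bps(1_000, 1_501_000, 300))   # normal case, polled 5 minutes apart
print(rate_bps(2**32 - 100, 200, 60))    # counter wrapped between polls
```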

Packet Monitoring

This involves inspecting packet headers and contents that pass through your machine with tools like tcpdump, ethereal, and wireshark, or hardware devices mounted alongside servers in the network. This provides a LOT of detail, including timing and destination information. However, that much detail also means high overhead, sometimes requiring a dedicated monitoring card.

Flow Monitoring

A monitor (possibly running on the router itself) records statistics per flow. A flow is defined by:

- Common source and destination IP

- Common source and destination port

- Common protocol type

- Common TOS byte

- Common interface

The flow may also have other information like:

- Next-hop IP address

- source/destination AS or prefix

Grouping of packets into flows based on these identifiers as well as based on time proximity is common.

To reduce monitoring overhead, sampling may also be done, by creating flows only on 1 out of every 10 or 100 packets (possibly randomly sampling).

Next in our series

Hopefully this has been a good high level overview of how Networks shape, police, measure, and limit the traffic that flows across them. I am planning more primers and would love your feedback and questions on: